|

I am a third-year Ph.D. student in the Key Laboratory of Multimedia Trusted Perception and Efficient Computing, Ministry of Education of China, Xiamen University, advised by Prof. Rongrong Ji. My research interests lie in 3D generation and 3D perception. Email / CV / Google Scholar / GitHub |

|

* indicates equal contribution |

|

You Shen, Zhipeng Zhang, Yansong Qu, Liujuan Cao arXiv, 2025 [arXiv] [Project Page] [Code] |

|

Dian Chen*, Yansong Qu*, Xinyang Li, Ming Li, Shengchuan Zhang arXiv, 2025 [arXiv] [Code] |

|

Shaohui Dai*, Yansong Qu*, Zheyan Li, Xinyang Li, Shengchuan Zhang, Liujuan Cao ACMMM, 2025 [arXiv] [Code] |

|

Yansong Qu, Shaohui Dai, Xinyang Li, Yuze Wang, You Shen, Liujuan Cao, Rongrong Ji AAAI, 2026 [arXiv] [Code] [Project Page] |

|

Yansong Guo, Jie Hu, Yansong Qu, Liujuan Cao ICCV, 2025 [arXiv] |

|

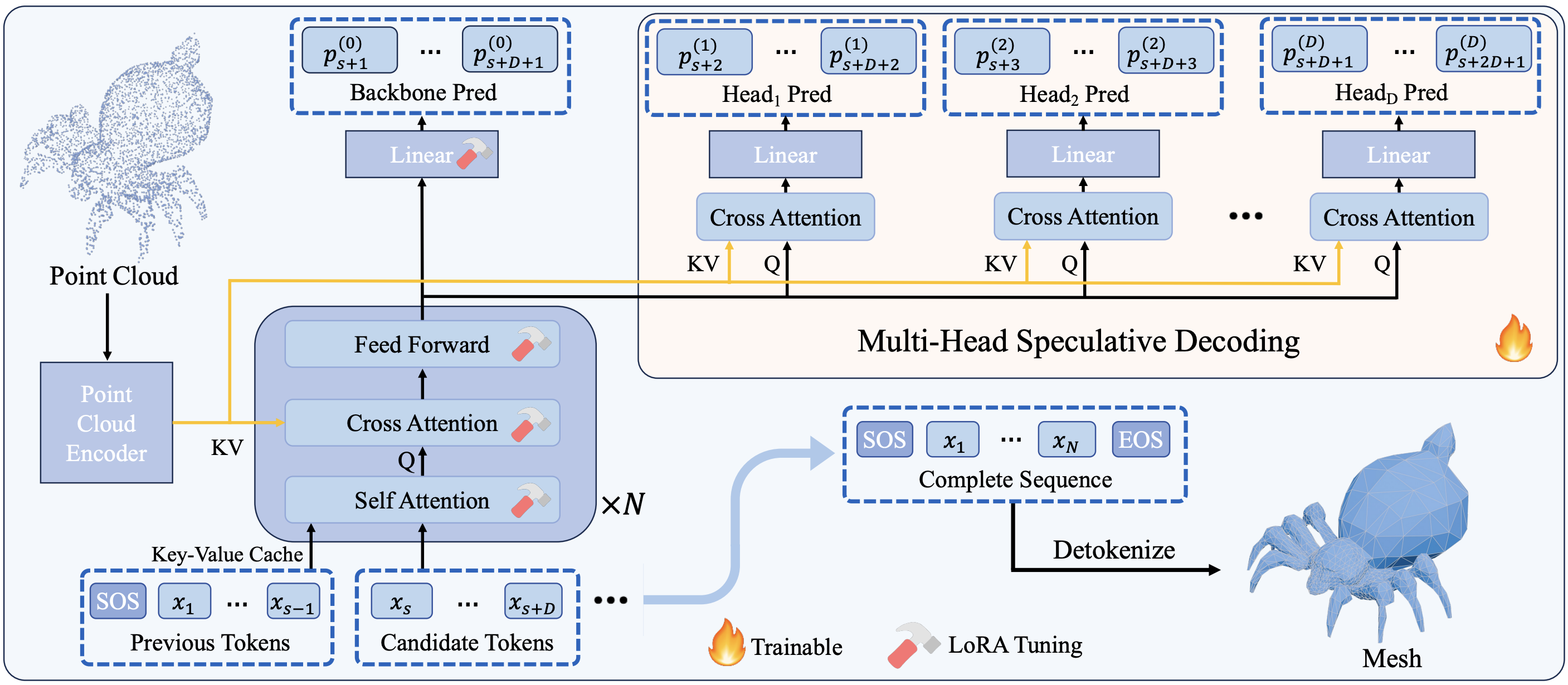

Yansong Qu, Dian Chen, Xinyang Li, Xiaofan Li, Shengchuan Zhang, Liujuan Cao, Rongrong Ji SIGGRAPH, 2025 [Project Page] [arXiv] [Code] |

|

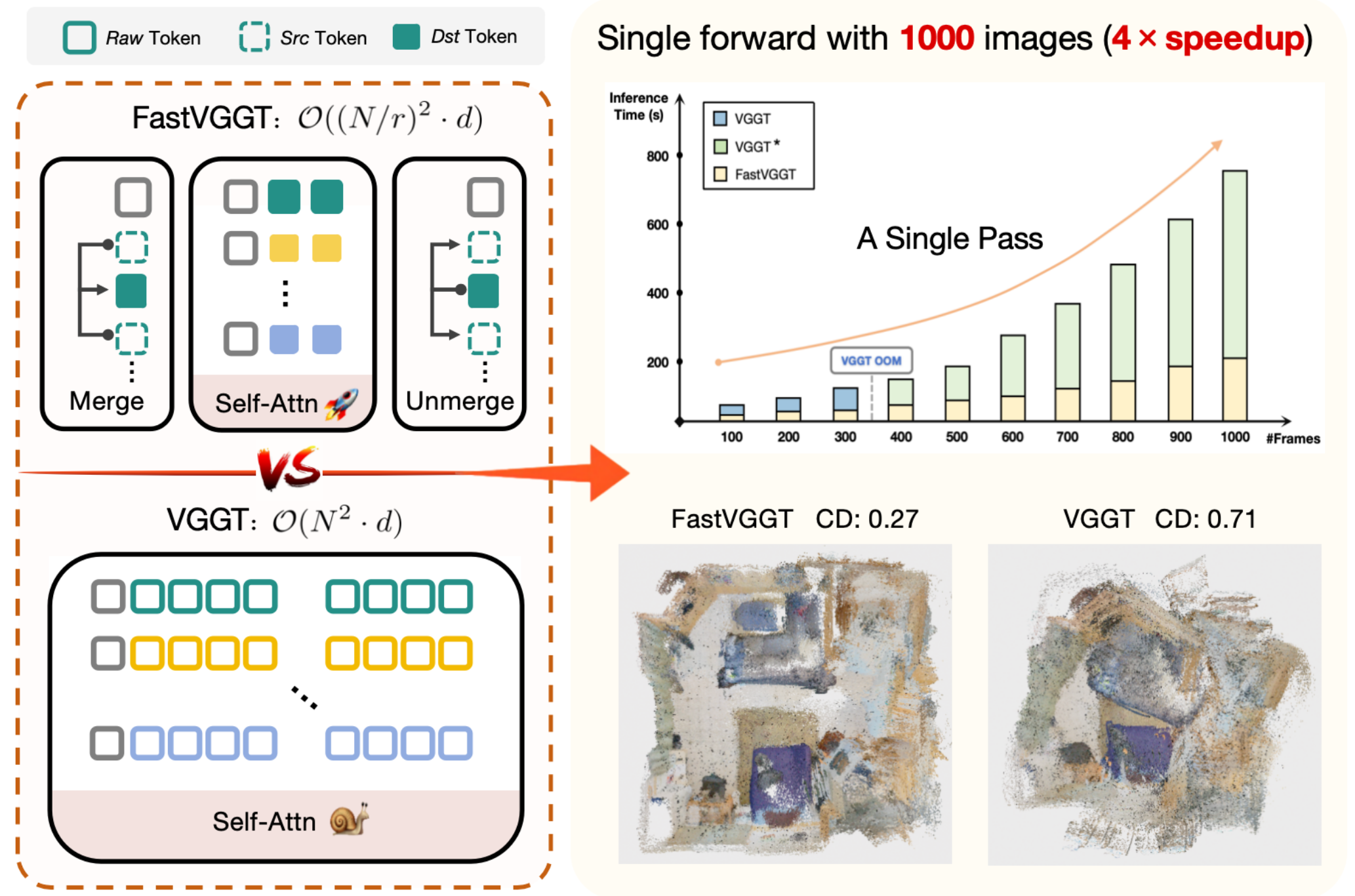

You Shen, Zhipeng Zhang, Xinyang Li, Yansong Qu, Yu Lin, Shengchuan Zhang, Liujuan Cao CVPR, 2025 [arXiv] |

|

Yuze Wang, Junyi Wang, Ruicheng Gao, Yansong Qu, Wantong Duan, Shuo Yang, Yue Qi IEEE Virtual Reality (VR), 2025, Best Paper Award; IEEE Transactions on Visualization and Computer Graphics (TVCG), 2025 [arXiv] |

|

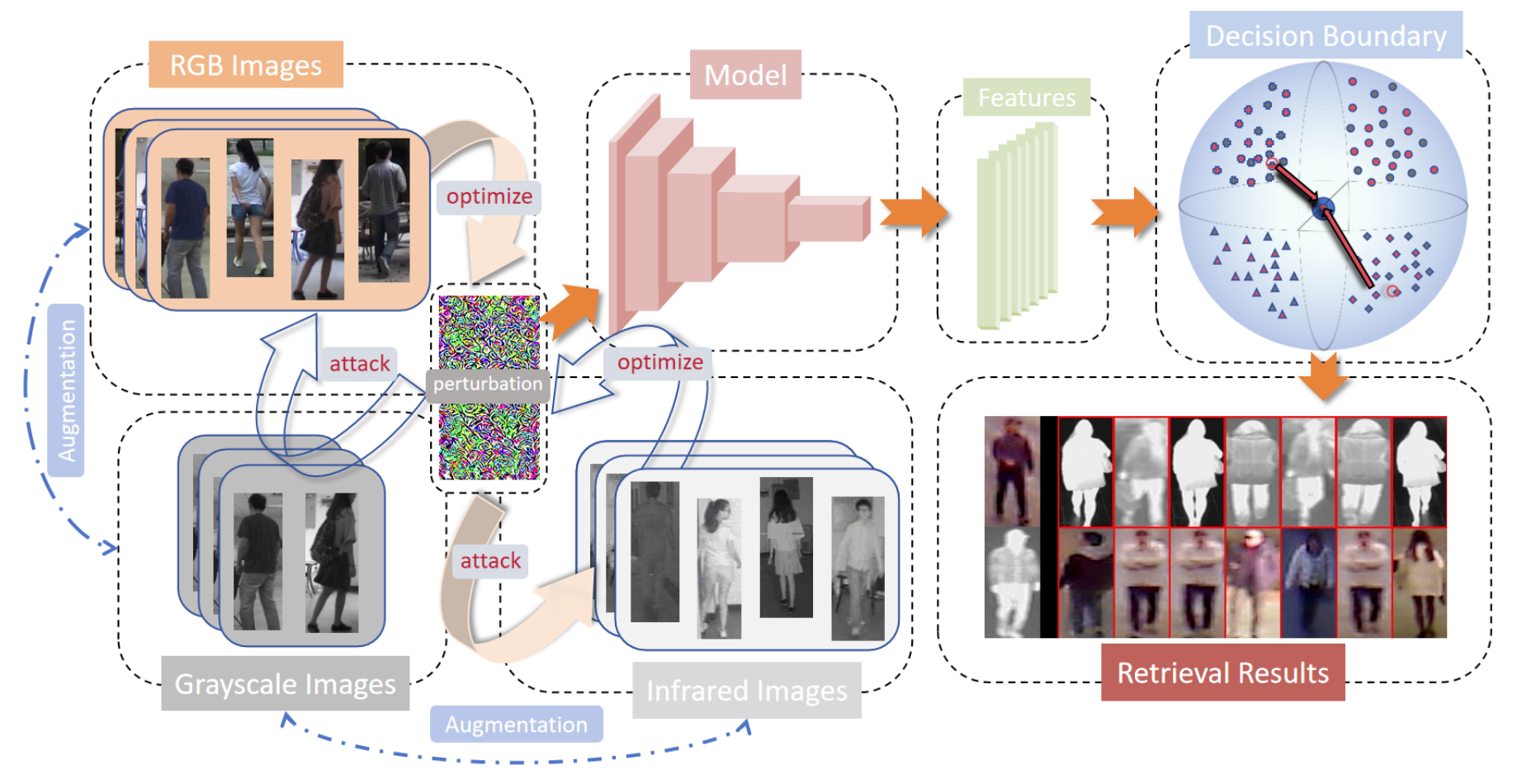

Yunpeng Gong, Zhun Zhong, Yansong Qu, Zhiming Luo, Rongrong Ji, Min Jiang NeurIPS, 2024 [arXiv] |

|

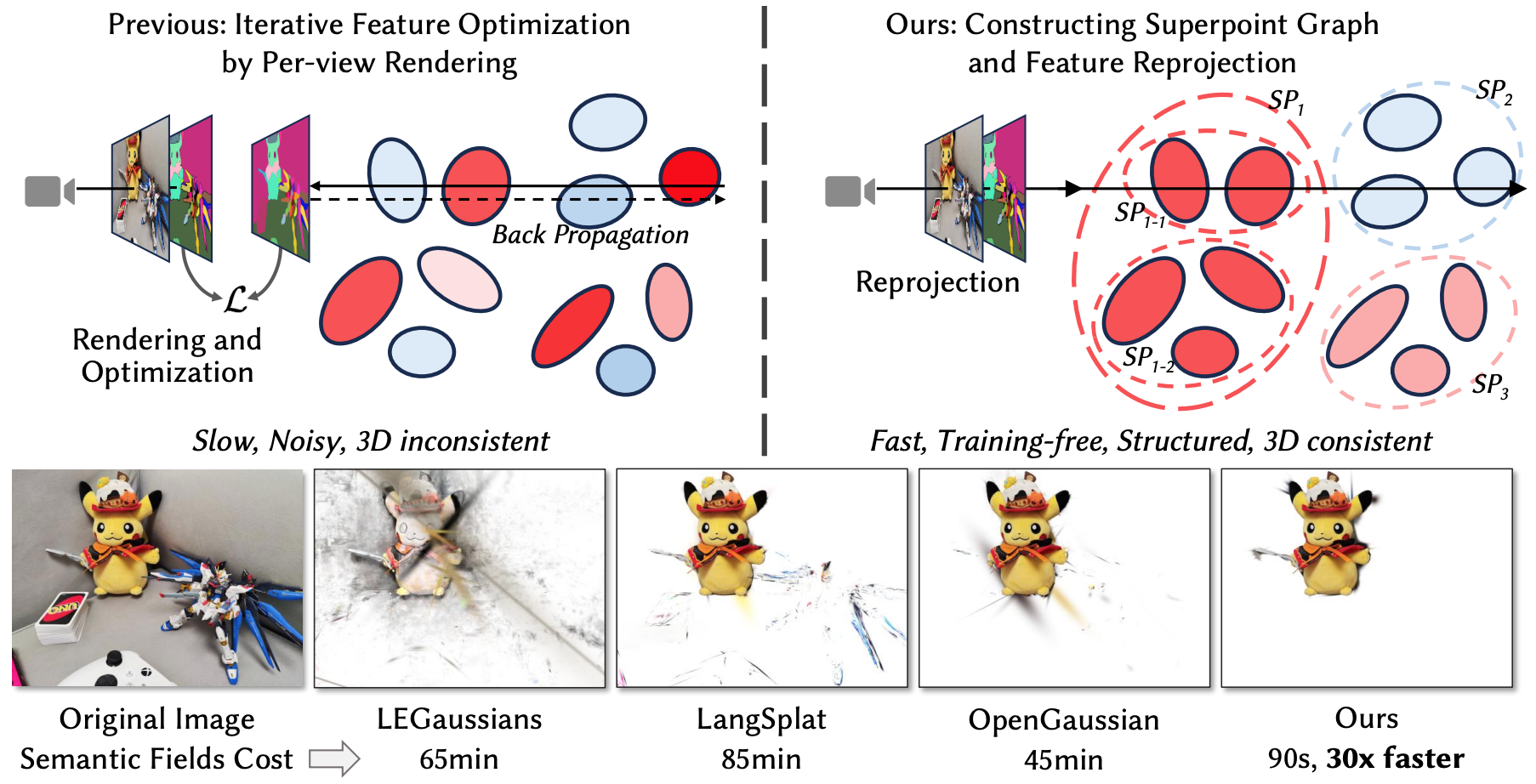

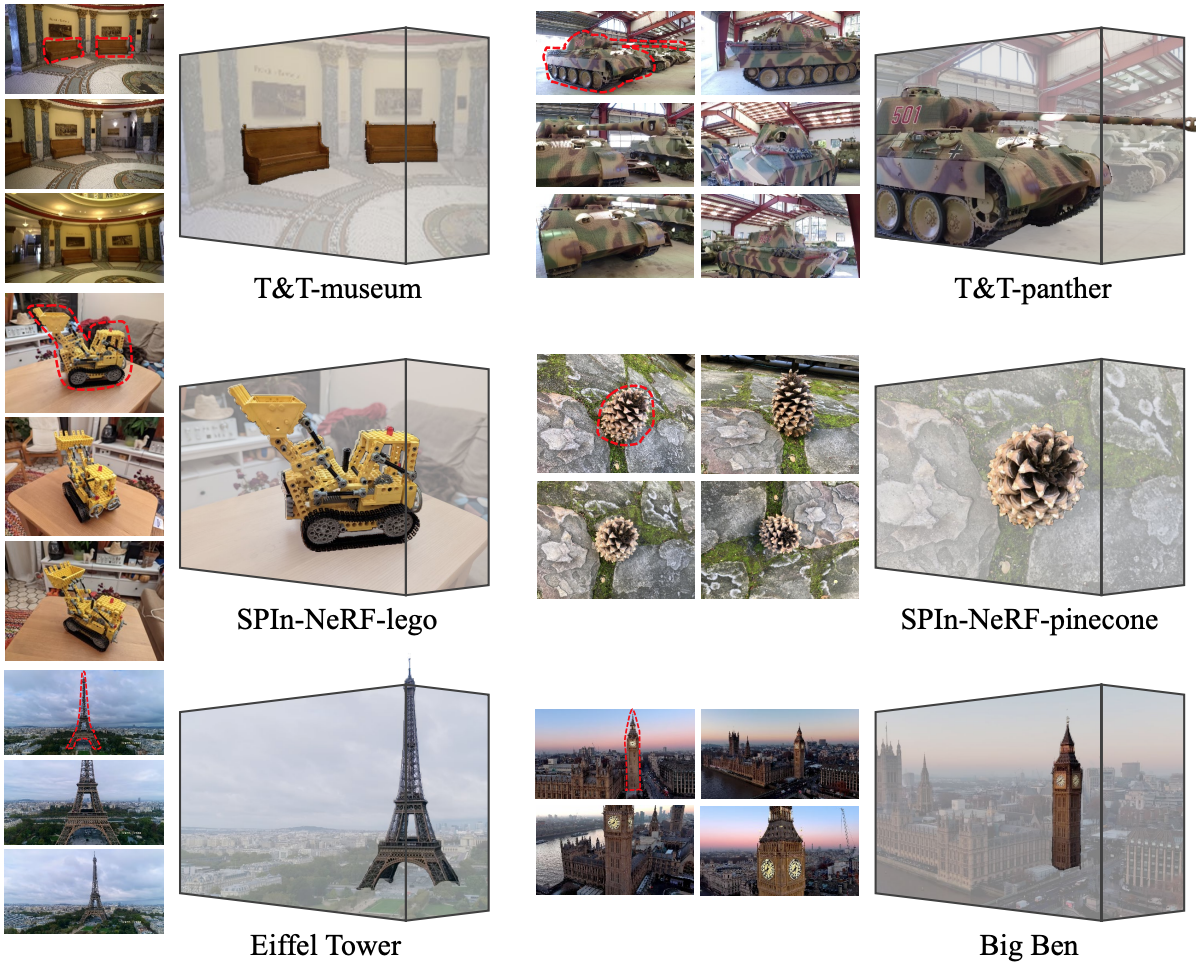

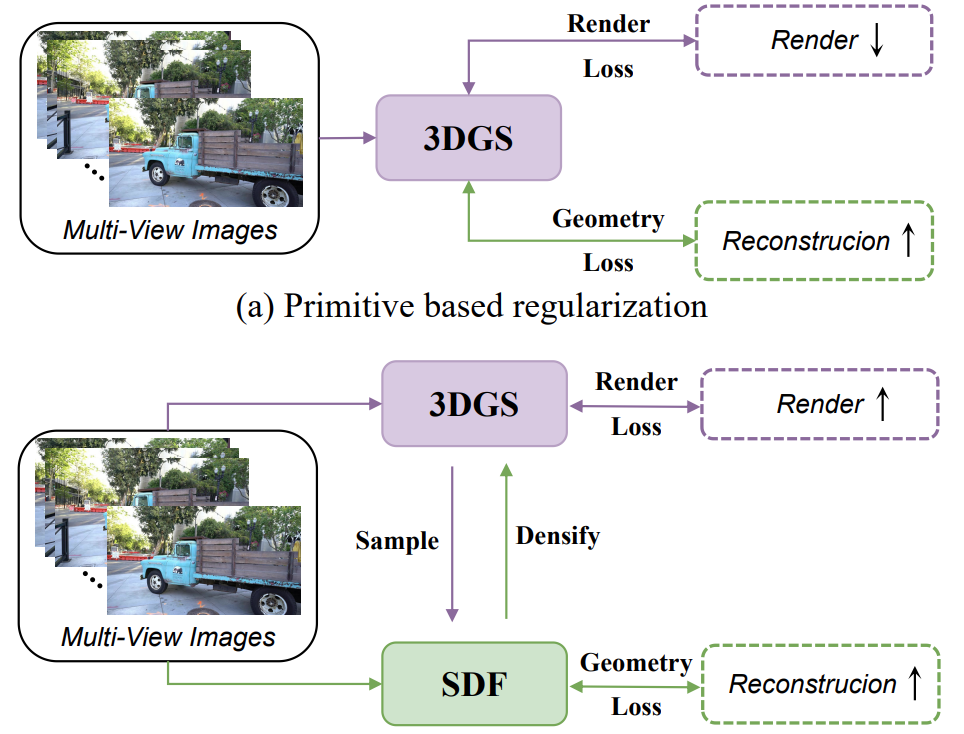

Xinyang Li, Zhangyu Lai, Linning Xu, Yansong Qu, Liujuan Cao, Shengchuan Zhang, Bo Dai, Rongrong Ji NeurIPS, 2024 [Project Page] [arXiv] [Code] |

|

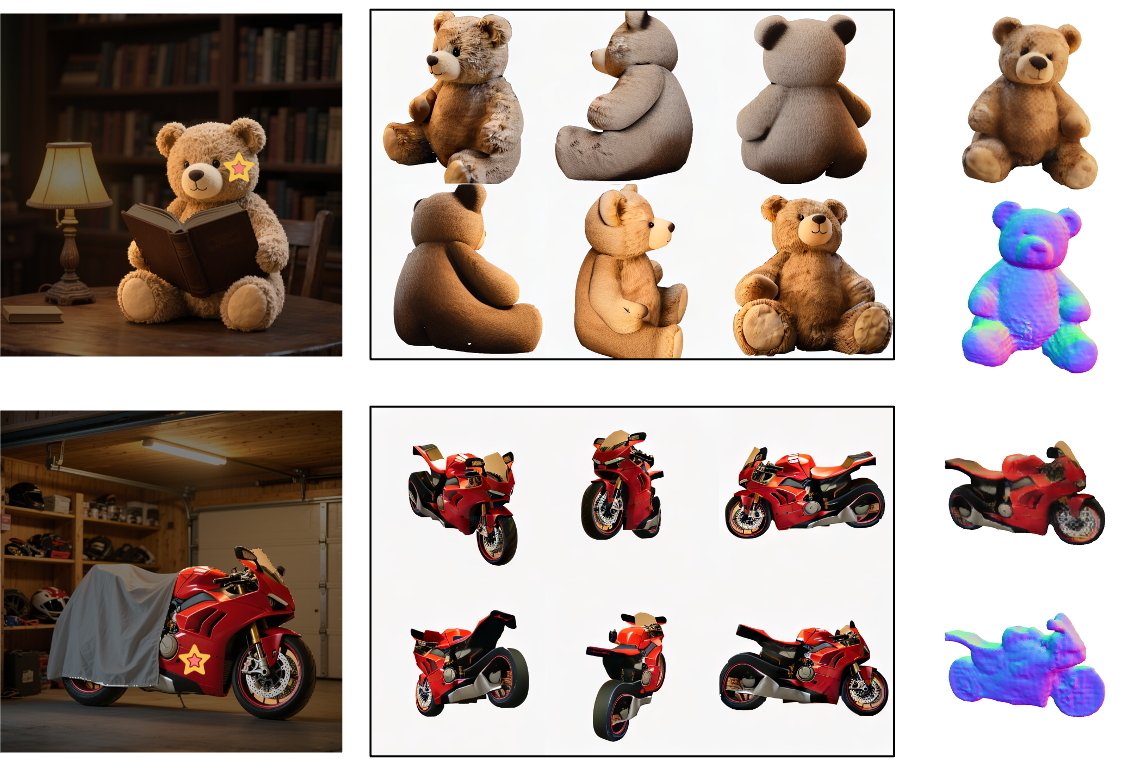

Yansong Qu*, Shaohui Dai*, Xinyang Li, Jianghang Lin, Liujuan Cao, Shengchuan Zhang, Rongrong Ji ACMMM, 2024 [Project Page] [arXiv] [Code] |

|

Conference Review: Journal Review: |

|